Out of the box, WCF services and clients in .NET 3.5 do not support HTTP compression, even if you turn on Dynamic Compression in IIS 6 or 7. This has been fixed in .NET 4, but if you are stuck with .NET 3.5 for the foreseeable future, you are out of luck. First, it is IIS's fault: it does not compress SOAP messages even when Dynamic Compression is turned on in IIS 7. Second, it is WCF's fault: it does not send the Accept-Encoding: gzip, deflate header in HTTP requests, which is what tells IIS that the client supports compression. Third, it is again WCF's fault: even if you make IIS send back a compressed response, WCF cannot process it because it does not know how to decompress it. So you have to tweak IIS and the System.Net factories to make compression work for WCF services.

Compression is key for performance: it can dramatically reduce the amount of data transferred from server to client, which gives a significant improvement when you exchange medium to large payloads over a WAN or the internet.
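To get a rough feel for the savings, here is a minimal sketch that gzips a made-up SOAP-like payload in memory and prints both sizes. The payload, the element names and the `SoapCompressionDemo` class are invented for illustration; real ratios depend entirely on your own messages.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;

public static class SoapCompressionDemo
{
    // Gzip a byte array in memory and return the compressed bytes.
    public static byte[] Gzip(byte[] input)
    {
        using (MemoryStream output = new MemoryStream())
        {
            // Dispose the GZipStream before reading the buffer so the
            // final block and the gzip footer are written out.
            using (GZipStream gzip = new GZipStream(output, CompressionMode.Compress))
            {
                gzip.Write(input, 0, input.Length);
            }
            return output.ToArray();
        }
    }

    // Build a hypothetical SOAP-like payload with many repeated elements.
    public static byte[] BuildSamplePayload(int rows)
    {
        StringBuilder xml = new StringBuilder();
        xml.Append("<s:Envelope xmlns:s=\"http://www.w3.org/2003/05/soap-envelope\">");
        xml.Append("<s:Body><GetCustomersResponse>");
        for (int i = 0; i < rows; i++)
        {
            xml.Append("<Customer><Id>").Append(i).Append("</Id>");
            xml.Append("<Name>Customer ").Append(i).Append("</Name></Customer>");
        }
        xml.Append("</GetCustomersResponse></s:Body></s:Envelope>");
        return Encoding.UTF8.GetBytes(xml.ToString());
    }

    public static void Main()
    {
        byte[] raw = BuildSamplePayload(500);
        byte[] compressed = Gzip(raw);
        Console.WriteLine("Raw: {0} bytes, gzipped: {1} bytes", raw.Length, compressed.Length);
    }
}
```

Repetitive XML such as serialized lists tends to compress well, which is why SOAP responses are a good candidate for dynamic compression.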

There are two steps: first configure IIS, then configure System.Net. There is no need to tweak anything in WCF itself, such as using a message interceptor to inject HTTP headers, as you find people trying to do here, here and here.

Configure IIS to support gzip on SOAP responses

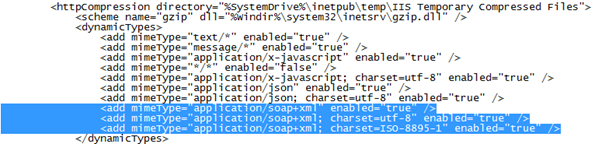

After you have enabled Dynamic Compression on IIS 7 following the guide, add the following block to the <dynamicTypes> section of the applicationHost.config file inside the C:\Windows\System32\inetsrv\config folder. Be very careful about the spaces in mimeType: each value must match exactly what you find in the Content-Type header of the SOAP responses generated by your WCF services.

    <add mimeType="application/soap+xml" enabled="true" />
    <add mimeType="application/soap+xml; charset=utf-8" enabled="true" />
    <add mimeType="application/soap+xml; charset=ISO-8859-1" enabled="true" />

After adding the block, the config file will look like this:

For IIS 6, you first need to enable dynamic compression and then allow the .svc extension so that IIS compresses responses from WCF services.

Next, you need to make WCF send the Accept-Encoding: gzip, deflate header as part of each request, and make it able to decompress a compressed response.

Send proper request header in WCF requests

You need to override the System.Net default WebRequest creator to create HttpWebRequest with compression turned on. First you create a class like this:

    using System;
    using System.Net;
    using System.Reflection;

    public class CompressibleHttpRequestCreator : IWebRequestCreate
    {
        public CompressibleHttpRequestCreator()
        {
        }

        WebRequest IWebRequestCreate.Create(Uri uri)
        {
            // HttpWebRequest cannot be constructed directly, so a
            // non-public constructor is invoked through reflection.
            HttpWebRequest httpWebRequest =
                Activator.CreateInstance(typeof(HttpWebRequest),
                    BindingFlags.CreateInstance | BindingFlags.Public |
                    BindingFlags.NonPublic | BindingFlags.Instance,
                    null, new object[] { uri, null }, null) as HttpWebRequest;

            if (httpWebRequest == null)
            {
                return null;
            }

            // Makes the request send Accept-Encoding: gzip, deflate and
            // decompress the response body transparently.
            httpWebRequest.AutomaticDecompression = DecompressionMethods.GZip |
                DecompressionMethods.Deflate;

            return httpWebRequest;
        }
    }

Then, in the WCF client application's app.config or web.config, add this system.net block, which tells System.Net to use your factory instead of the default one.

    <system.net>
      <webRequestModules>
        <remove prefix="http:"/>
        <add prefix="http:" type="WcfHttpCompressionEnabler.CompressibleHttpRequestCreator, WcfHttpCompressionEnabler, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
      </webRequestModules>
    </system.net>

That’s it.
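If you want a quick sanity check that the client process really picked up the factory, the following sketch creates a request without sending it and prints its AutomaticDecompression setting. The `CompressionProbe` class and the URL are hypothetical names used only for illustration. When the webRequestModules entry above is in effect, the value should include both GZip and Deflate; with the default factory it is None.

```csharp
using System;
using System.Net;

public static class CompressionProbe
{
    // Creates (but never sends) a request and reports which decompression
    // methods the active WebRequest factory switched on.
    public static DecompressionMethods GetDecompression(string url)
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
        return request.AutomaticDecompression;
    }

    public static void Main()
    {
        // Hypothetical address; substitute your own service URL.
        DecompressionMethods methods = GetDecompression("http://localhost/MyService.svc");
        Console.WriteLine("AutomaticDecompression = " + methods);
    }
}
```

This only confirms the client side. To confirm that IIS actually compresses the SOAP responses, inspect the Content-Encoding response header with an HTTP debugging proxy.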

I have uploaded a sample project that shows how all of this works.