UPDATE: There’s a newer article on this that shows how to create a truly RESTful API and website using the same ASP.NET MVC code.

www.codeproject.com/KB/aspnet/aspnet_mvc_restapi.aspx

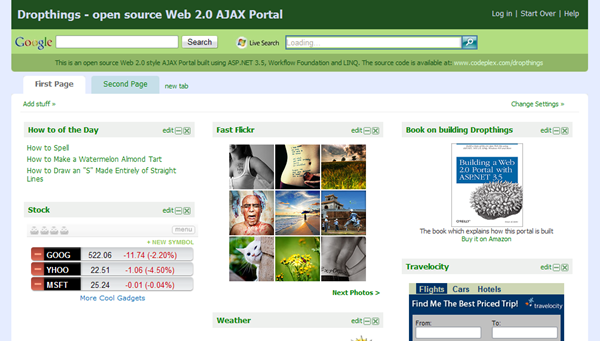

ASP.NET MVC Controllers can return objects and collections directly, without rendering a view, which makes the framework quite appealing for creating REST-like APIs. The nice extensionless URLs provided by MVC make it handy for building REST services: you can create APIs with smart URLs like “something.com/API/User/GetUserList”.

There are some challenges to solve in order to expose a REST API:

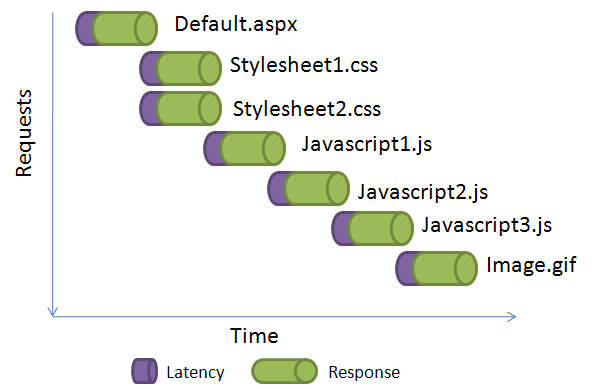

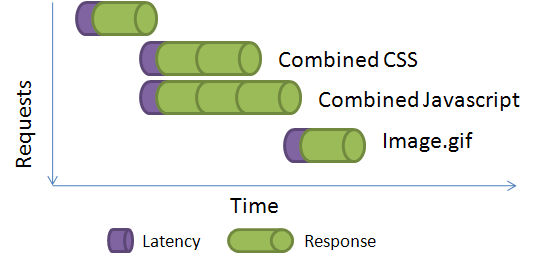

- Based on who is calling your API, you need to be able to speak both Json and plain old Xml (POX). If the call comes from an AJAX front-end, you need to return objects serialized as Json. If it’s coming from some other client, say a PHP website, you need to return plain Xml.

- Similarly, you need to be able to understand REST, Json and plain Xml calls. Someone can hit you using a REST URL, someone can post a Json payload, and someone else can post an Xml payload.

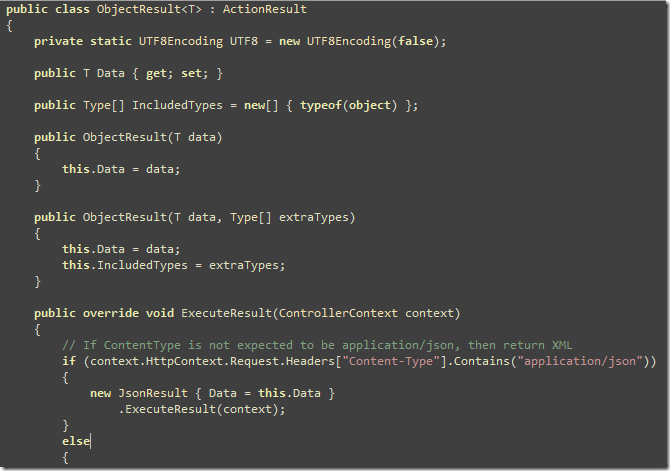

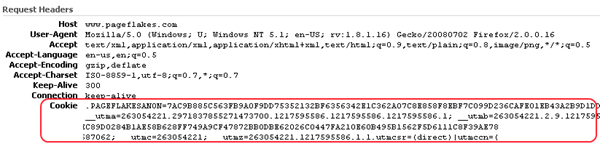

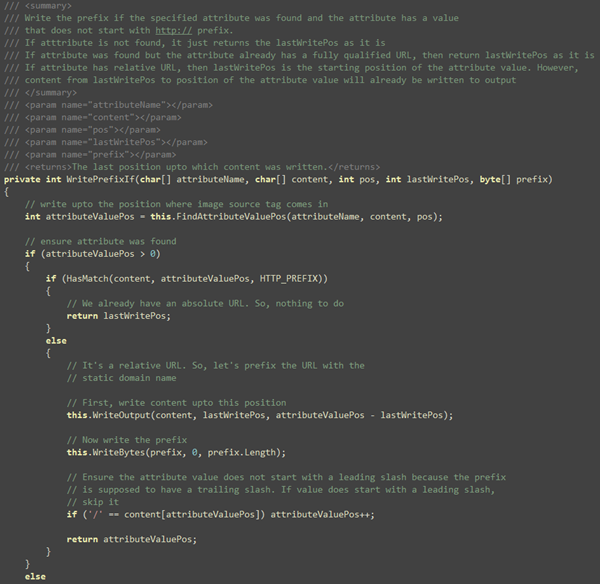

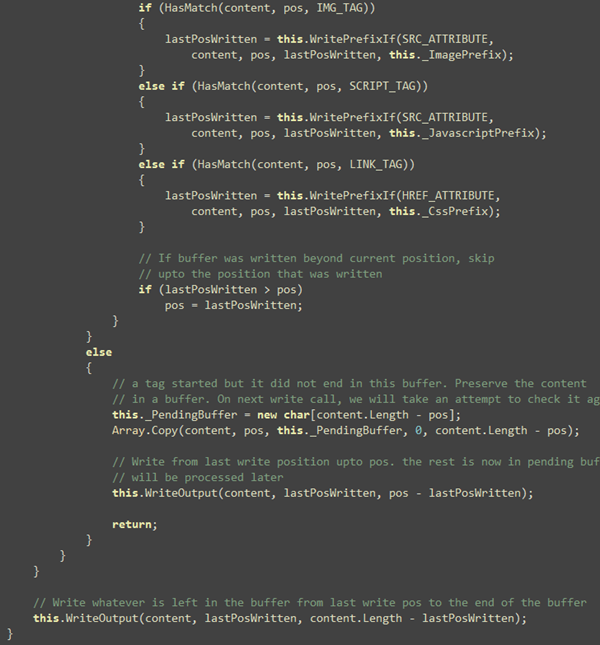

I have created an ObjectResult class which takes an object and generates Xml or Json output automatically by looking at the Content-Type header of the HttpRequest. AJAX calls send Content-Type=application/json, so it generates Json as the response in that case. When the Content-Type is something else, it does simple Xml serialization.
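The selection logic described above can be sketched without any MVC types. This is a minimal sketch under stated assumptions, not the article's actual ObjectResult: `ResponseFormat`, `ResponseSerializer` and `User` are hypothetical names, and using `DataContractJsonSerializer` for the Json output is an assumption. Inside a real `ActionResult.ExecuteResult`, the content type would come from `context.HttpContext.Request.ContentType`.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Json;
using System.Text;

// Hypothetical sample type, used only for illustration.
public class User
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public enum ResponseFormat { Json, Xml }

public static class ResponseSerializer
{
    // Decide the response format from the request's Content-Type header.
    // The header may carry a suffix such as "; charset=utf-8",
    // so only the prefix is compared.
    public static ResponseFormat PickFormat(string contentType)
    {
        bool isJson = contentType != null &&
            contentType.Trim().StartsWith("application/json", StringComparison.OrdinalIgnoreCase);
        return isJson ? ResponseFormat.Json : ResponseFormat.Xml;
    }

    // Serialize any object to a Json string (assumed serializer choice).
    public static string ToJson(object value)
    {
        var serializer = new DataContractJsonSerializer(value.GetType());
        using (var buffer = new MemoryStream())
        {
            serializer.WriteObject(buffer, value);
            return Encoding.UTF8.GetString(buffer.ToArray());
        }
    }
}
```

A caller would first ask `PickFormat` for the format and then write either the Json string or an Xml string to the response, setting the response Content-Type to match.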

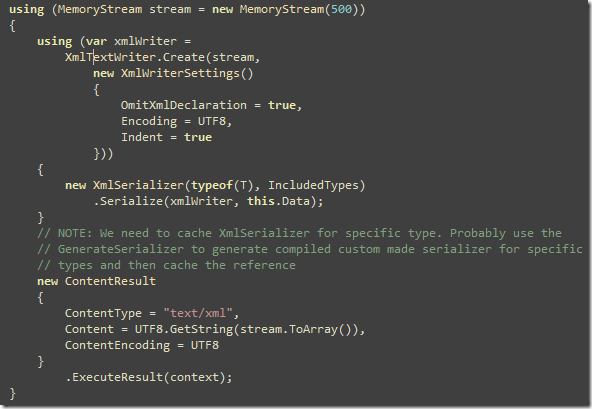

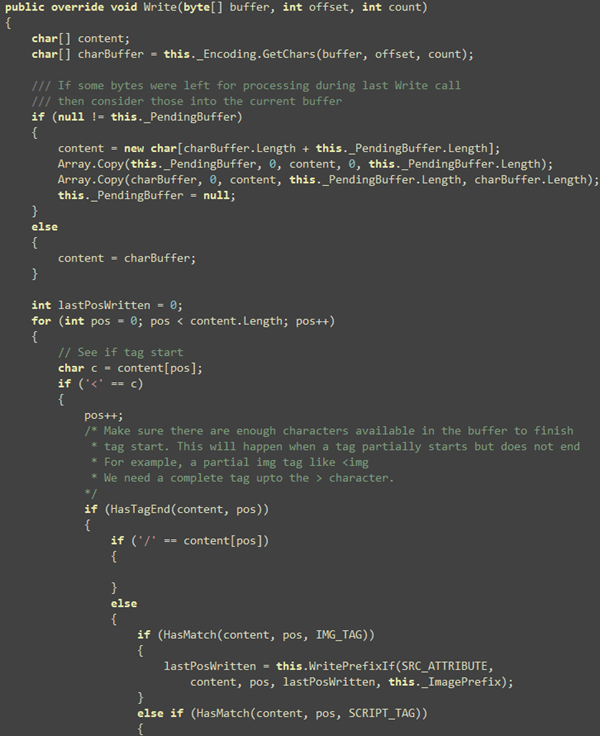

Here’s the ObjectResult that you can use from Controllers to return objects; it takes care of picking the proper serialization method. Above shows the Json serialization, which is quite simple. Xml serialization is a bit more complex though:

Things to note here:

- You have to force UTF8 encoding. Otherwise it produces UTF16 output.

- The XML declaration is skipped because it is not strictly necessary and wastes bandwidth. If you need it, turn it on.

- I have turned on indenting for better readability. You can turn it off to save bandwidth.
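The three points above map onto `XmlWriterSettings`. The following is a hedged sketch rather than the article's original code: `XmlOutput` and `User` are hypothetical names. Writing into a stream lets the `Encoding` setting take effect; writing into a `StringWriter` or `StringBuilder` is what yields UTF-16, because .NET strings are UTF-16.

```csharp
using System.IO;
using System.Text;
using System.Xml;
using System.Xml.Serialization;

// Hypothetical sample type, used only for illustration.
public class User
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class XmlOutput
{
    public static string ToXml(object value)
    {
        var settings = new XmlWriterSettings
        {
            Encoding = new UTF8Encoding(false), // force UTF-8, without a byte order mark
            OmitXmlDeclaration = true,          // skip <?xml ... ?> to save bandwidth
            Indent = true                       // readable output; set to false to save bandwidth
        };

        var serializer = new XmlSerializer(value.GetType());
        using (var buffer = new MemoryStream())
        {
            // Dispose the writer first so everything is flushed into the buffer.
            using (var writer = XmlWriter.Create(buffer, settings))
            {
                serializer.Serialize(writer, value);
            }
            return Encoding.UTF8.GetString(buffer.ToArray());
        }
    }
}
```

Calling `XmlOutput.ToXml(new User { Id = 1, Name = "Omar" })` returns an indented `<User>` element with no XML declaration in front of it.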

Some of you might be boiling inside looking at my obscure coding style. I love this style! I am spoiled by jQuery. I wish there was a cQuery. I actually started writing one, but it never saw daylight, just like my hundred other open source attempts.

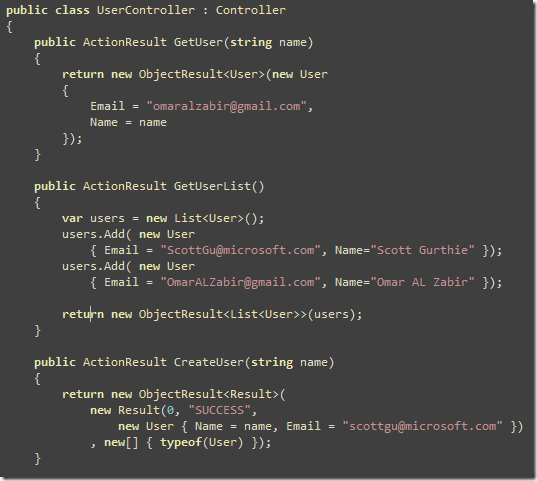

Now back to object serialization. We got the serialization done, so now you can return objects from a Controller easily:

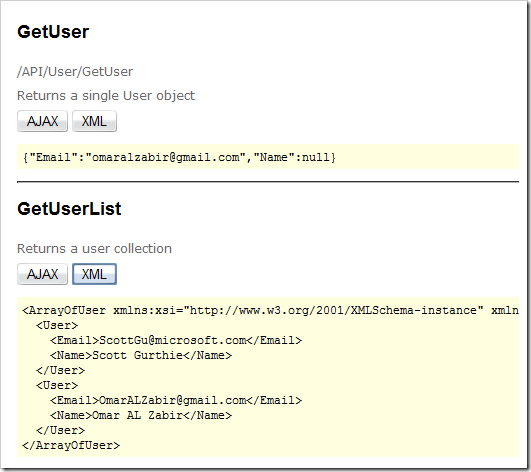

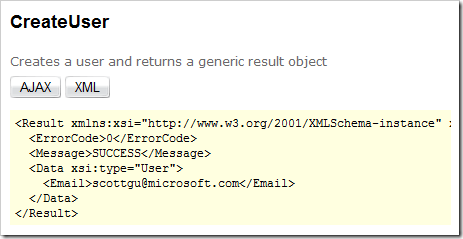

You can use the test web project to call these methods and see the result:

So far you have seen simple object and list serialization. A best practice is to return a common result object that has a status, a message and then the real payload. It’s handy when you only need to return an error but no object or list. I use a common Result object that has three properties: ErrorCode (0 by default, which means success), Message (a string) and Data, which is the real object.
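A result envelope with those three properties might look like the sketch below. The generic `Result<T>` shape and the `Ok`/`Fail` helpers are assumptions made for illustration; the original Result class may well use a plain `object` for Data.

```csharp
// Common envelope returned for both success and failure.
public class Result<T>
{
    public int ErrorCode { get; set; }   // 0 (the default) means success
    public string Message { get; set; }  // human-readable status or error text
    public T Data { get; set; }          // the real payload; left at its default on failure
}

// Hypothetical factory helpers that keep call sites short.
public static class Result
{
    public static Result<T> Ok<T>(T data)
    {
        return new Result<T> { ErrorCode = 0, Message = "", Data = data };
    }

    public static Result<T> Fail<T>(int errorCode, string message)
    {
        return new Result<T> { ErrorCode = errorCode, Message = message, Data = default(T) };
    }
}
```

With this shape, `Result.Ok(users)` and `Result.Fail<User[]>(404, "User not found")` serialize to the same structure, so a client can always check ErrorCode first and read Data only on success.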

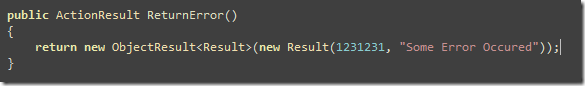

When you want to return only a result with error message, you can do this:

This produces a result like this:

No payload here. So, the return format is always consistent. Those who are consuming the service can write common Xml or Json parsing code to consume both success and failure responses. Those who are building an API for their website, I humbly request you to return a consistent response for both success and failure. It makes our lives so much easier.

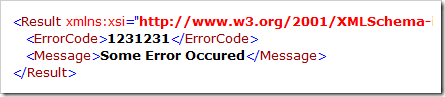

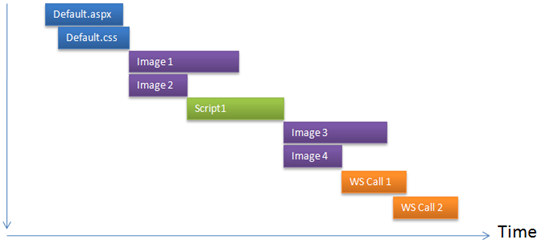

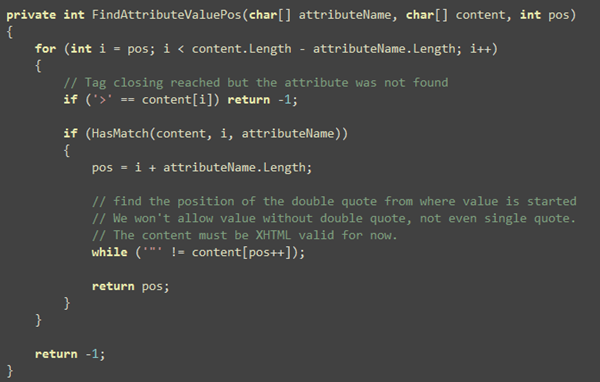

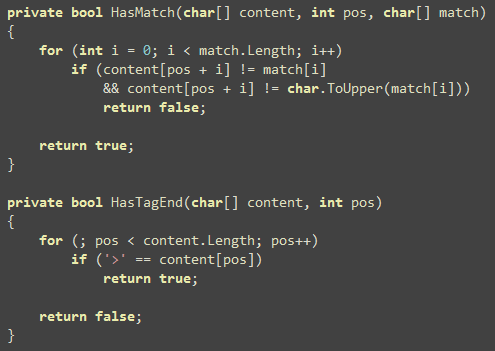

So far, we have only returned objects and lists. Now we need to accept Json and Xml payloads delivered via HTTP POST. Sometimes your client might want to upload a collection of objects in one shot for batch processing, using either Json or Xml format. There’s no native support in ASP.NET MVC to automatically parse posted Json or Xml and map it to Action parameters. So, I wrote a filter that does it.

This filter intercepts calls going to Action methods and checks whether the client has posted Xml or Json. Based on what has been posted, it uses DataContractJsonSerializer or the simple XmlSerializer to convert the payload to objects or collections.

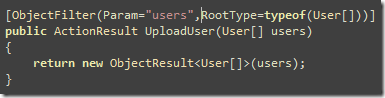

You use this attribute on Action methods like this:

The attribute expects a parameter name where it stores the deserialized object/collection. It also expects a root type that it needs to pass to the deserializer. If you are expecting a single object, specify typeof(SingleObject). If you are expecting a list of objects, specify an array of that object, like typeof(SingleObject[]).
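The deserialization step that such a filter performs can be sketched with plain .NET types. `PayloadReader` and `User` are hypothetical names, and treating any non-Json Content-Type as Xml is an assumption. The comment at the bottom shows how it could be wired into an MVC action filter; `ParamName` and `RootType` there stand in for the two attribute arguments described above.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Json;
using System.Xml.Serialization;

// Hypothetical sample type, used only for illustration.
public class User
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class PayloadReader
{
    // Convert a posted body into an instance of rootType.
    // Pass typeof(User) for a single object or typeof(User[]) for a list.
    public static object Read(Stream body, string contentType, Type rootType)
    {
        bool isJson = contentType != null &&
            contentType.Trim().StartsWith("application/json", StringComparison.OrdinalIgnoreCase);

        if (isJson)
        {
            return new DataContractJsonSerializer(rootType).ReadObject(body);
        }
        // Assumption: anything that is not Json is treated as plain Xml.
        return new XmlSerializer(rootType).Deserialize(body);
    }
}

// Possible wiring inside a System.Web.Mvc.ActionFilterAttribute subclass:
//
//   public override void OnActionExecuting(ActionExecutingContext filterContext)
//   {
//       var request = filterContext.HttpContext.Request;
//       filterContext.ActionParameters[ParamName] =
//           PayloadReader.Read(request.InputStream, request.ContentType, RootType);
//   }
```

Storing the result in `ActionParameters` under the configured name is what makes the deserialized object appear as an ordinary argument of the Action method.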

You can test the project live at this URL:

http://labs.dropthings.com/MvcWebAPI

The code is also available at:

http://code.msdn.microsoft.com/MvcWebAPI

Enjoy!

————

Here’s an Eid gift for my believer brothers. Check out this amazing site www.quranexplorer.com/. You will get online recitation, translation – verse by verse. The recitation of Mishari Rashid is something you have to listen to to believe. Try these two recitations to see what I mean:

Sura 97 – Verse 1

Sura 114 – Verse 1

Press the “Play” icon at bottom left (hard to find).