When working on open source projects, you frequently have to build your code, clean up temporary files, remove your own passwords and connection strings from web.config, create a binary deployment package as a zip file, and then commit and push to GitHub. Let’s automate all of this with a configurable PowerShell script.

Here’s the full script.

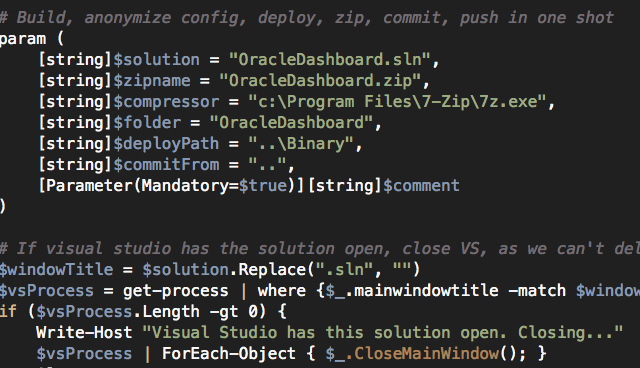

First step, let’s define the parameters for the script with some default values:

param (

[string]$solution = "OracleDashboard.sln",

[string]$zipname = "OracleDashboard.zip",

[string]$compressor = "c:\Program Files\7-Zip\7z.exe",

[string]$folder = "OracleDashboard",

[string]$deployPath = "..\Binary",

[string]$commitFrom = "..",

[Parameter(Mandatory=$true)][string]$comment

)

Here is what each parameter means:

- $solution = path of the solution file, relative to the script location.

- $zipname = name of the zip file.

- $compressor = path to 7-Zip’s 7z.exe.

- $folder = the folder that contains the code; this is the folder that gets zipped.

- $deployPath = relative path that the zip file is moved to.

- $commitFrom = relative path that the script runs git commit and git push from.

- $comment = the comment for the git commit. This parameter is mandatory.

The first thing the script does is check whether the solution is open in Visual Studio and, if so, close it. You can comment this section out if you want, but while Visual Studio has the solution open, the /obj folder cannot be deleted.

# If visual studio has the solution open, close VS, as we can't delete obj folder while it is open

$windowTitle = $solution.Replace(".sln", "")

$vsProcess = get-process | where {$_.mainwindowtitle -match $windowTitle -and $_.ProcessName -eq "devenv"}

if ($vsProcess.Length -gt 0) {

Write-Host "Visual Studio has this solution open. Closing..."

$vsProcess | ForEach-Object { $_.CloseMainWindow(); }

Sleep 5

Read-Host "Press ENTER to proceed if Visual Studio is closed"

$vsProcess = get-process | where {$_.mainwindowtitle -match $windowTitle -and $_.ProcessName -eq "devenv"}

if ($vsProcess.Length -gt 0) {

Write-Host "Visual Studio still has the solution open. Aborting."

Return

}

}

The next step is to do some spring cleaning:

Push-Location

if (Test-Path $zipname) { rm $zipname; }

# Clean up deploy folder

rm $deployPath\*.* -Force -Recurse

First, Push-Location remembers the current path, because we have to come back to it when we are done. Then the script removes the zip file if it already exists and cleans up $deployPath. You can remove that cleanup step if you want to keep old deployment packages, but then you have to generate unique file names for the packages yourself.
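
If you do keep old packages, one way to get unique names is to add a timestamp to the zip name. The following is a minimal sketch and not part of the original script; the function name New-PackageName and its parameters are hypothetical.

```powershell
# Hypothetical helper (not in the original script): turns "OracleDashboard.zip"
# into a timestamped name such as "OracleDashboard-20240131-154502.zip".
function New-PackageName {
    param (
        [Parameter(Mandatory=$true)][string]$zipname,
        [datetime]$timestamp = (Get-Date)
    )

    $base  = [System.IO.Path]::GetFileNameWithoutExtension($zipname)
    $ext   = [System.IO.Path]::GetExtension($zipname)

    # Invariant culture keeps the digits and calendar the same on every machine
    $stamp = $timestamp.ToString("yyyyMMdd-HHmmss", [System.Globalization.CultureInfo]::InvariantCulture)

    return "$base-$stamp$ext"
}

$zipname = New-PackageName -zipname "OracleDashboard.zip"
```

With this in place you would skip the cleanup of $deployPath and let each run add a new package next to the old ones.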

Now let’s build the solution and remove the /obj folder:

# Build new version

msbuild /verbosity:minimal $solution

# Delete obj

if (Test-Path $folder\obj) { rm $folder\obj -Force -Recurse }

The next step is to remove all sensitive information from web.config, which includes connection strings, the authorization block, appSettings entries and so on. This is all up to you to customize:

# backup the web.config and remove sensitive entries before pushing to git, eg connectionString

[string]$filename = gi $folder\web.config

[string]$backup = [System.IO.File]::ReadAllText($filename)

$xml = [xml]$backup

$xml.PreserveWhitespace = $true

foreach($n in $xml.configuration.connectionStrings.add) {

$n.ParentNode.RemoveChild($n);

}

# Anonymize any sensitive appSettings entry

foreach($n in $xml.configuration.appSettings.add) {

switch($n.key) {

"Password" { $n.value = "Password" }

}

}

# Remove authorization blocks

$xml.configuration.'system.web'.authorization.RemoveAll()

$xml.Save($filename)
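
If you want to experiment with your own scrubbing rules without touching a real web.config, you can try the same technique on an in-memory document first. This is only a sketch: the sample XML and every value in it are made up for illustration, and the array wrapper around the connection strings is my own precaution, not something the original script does.

```powershell
# Made-up sample configuration, used only for this experiment
$sample = @'
<configuration>
  <connectionStrings>
    <add name="Db" connectionString="Server=example;Password=secret" />
  </connectionStrings>
  <appSettings>
    <add key="Password" value="secret" />
    <add key="Theme" value="dark" />
  </appSettings>
</configuration>
'@

$xml = [xml]$sample

# Snapshot the nodes into an array before removing them from the document
foreach ($n in @($xml.configuration.connectionStrings.add)) {
    [void]$n.ParentNode.RemoveChild($n)
}

# Anonymize only the appSettings entries you consider sensitive
foreach ($n in $xml.configuration.appSettings.add) {
    switch ($n.key) {
        "Password" { $n.value = "Password" }
    }
}

# Inspect the result instead of saving it to disk
$xml.OuterXml
```

Once the output looks right, the same switch cases can be added to the script.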

Next, let’s run a regular expression check to make sure web.config does not have any sensitive information left in it by accident. Again, this is all up to you to customize.

# verify if web.config still contains any sensitive info

[string]$config = gc $folder\web.config

if ( ($config -match 'connectionString="\w+') -or ($config -match 'users="\w+') ) {

Write-Host "Configuration file is not cleaned."

# Restore web.config

[System.IO.File]::WriteAllText($filename, $backup)

Exit

}
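
To see what this check does and does not catch, it can help to run the two patterns against a few strings. The sketch below wraps them in a hypothetical function, Test-ConfigIsClean, which is not part of the original script; the XML fragments are made up.

```powershell
# Hypothetical wrapper around the two patterns used by the script.
# Returns $true when neither pattern finds a value.
function Test-ConfigIsClean {
    param ([string]$config)

    $hasConnectionString = $config -match 'connectionString="\w+'
    $hasUsers            = $config -match 'users="\w+'

    return -not ($hasConnectionString -or $hasUsers)
}

# Made-up fragments for illustration only
Test-ConfigIsClean '<add name="Db" connectionString="Server=x;Password=y" />'
Test-ConfigIsClean '<allow users="alice,bob" />'
Test-ConfigIsClean '<add name="Db" connectionString="" />'
Test-ConfigIsClean '<allow users="*" />'
```

The first two calls return $false and the last two return $true. Note that users="*" passes, because * is not a word character and \w+ does not match it. If you also want to flag wildcard rules, you need to extend the pattern.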

Now it is time to compress the source folder into a zip file using 7-Zip and move it to the deploy folder.

# Compress the solution folder and copy to deploy folder

cmd /c $compressor a -tzip $zipname $folder -r

cmd /c copy $zipname $deployPath /Y

cmd /c del $zipname

Finally, commit and push with git:

# Commit and push to GitHub

cd $commitFrom

git pull

git add -A *.*

git commit -a -m $comment

git push

Pop-Location

The last step is to restore your own web.config, which was anonymized:

# Restore web.config

[System.IO.File]::WriteAllText($filename, $backup)

That’s it. Now all you have to do is run ./gitpush.ps1 from the PowerShell command line with a commit comment, and you are done!