You usually write unit tests and integration tests separately, using different technologies. For unit tests, you use a mocking framework like Moq to do the mocking. For integration tests, you do not mock anything; you write test classes that hit a service or facade to do an end-to-end test. However, sometimes the integration and unit tests are more or less the same: they test the same class through its interface and perform the same tests against the same expectations.

For example, for a WCF service, you write unit tests against the ServiceContract interface, using a mocking framework to mock that interface. In the following example, I am using Moq to test the IPortalService interface, which is the ServiceContract of a WCF service. I am using xUnit and SubSpec to write BDD-style tests.

```csharp
[Specification]
public void GetAllWidgetDefinitions_should_return_all_widget_in_widget_gallery()
{
    var portalServiceMock = new Mock<IPortalService>();
    var portalService = portalServiceMock.Object;

    "Given an already populated widget gallery".Context(() =>
    {
        // Stub the service contract so it returns two widgets
        portalServiceMock.Setup(p => p.GetAllWidgetDefinitions())
            .Returns(new Widget[] { new Widget { ID = 1 }, new Widget { ID = 2 } })
            .Verifiable();
    });

    Widget[] widgets = default(Widget[]);

    "When all widget definitions are requested".Do(() =>
    {
        widgets = portalService.GetAllWidgetDefinitions();
    });

    "It should return the widgets in the widget gallery".Assert(() =>
    {
        // Fails if the stubbed call was never made
        portalServiceMock.VerifyAll();
        Assert.NotEqual(0, widgets.Length);
        Assert.NotEqual(0, widgets[0].ID);
    });
}
```

Now, when I want to do an end-to-end test to see whether the service really works with all the wires connected, I write a test like this:

```csharp
[Specification]
public void GetAllWidgetDefinitions_should_return_all_widget_in_widget_gallery()
{
    // Client proxy that calls the real WCF service
    var portalService = new ManageCustomerPortalClient();

    // Nothing to set up: the real widget gallery is expected to be populated already
    "Given an already populated widget gallery".Context(() => { });

    Widget[] widgets = default(Widget[]);

    "When all widget definitions are requested".Do(() =>
    {
        widgets = portalService.GetAllWidgetDefinitions();
    });

    "It should return the widgets in the widget gallery".Assert(() =>
    {
        Assert.NotEqual(0, widgets.Length);
        Assert.NotEqual(0, widgets[0].ID);
    });
}
```

If you look at the difference, it’s very small. The mocking is gone. The same operation is called with the same parameters. The same asserts are done against the same expectations. It’s an awful duplication of code.

Conditional compilation saves the day. You can write the unit test with conditional compilation directives so that, in the real environment, the mocking code is compiled out and the real service gets called. For example, the following code does both the unit test and the integration test for me. All I do is turn a conditional compilation symbol on or off.

```csharp
[Specification]
public void GetAllWidgetDefinitions_should_return_all_widget_in_widget_gallery()
{
#if MOCK
    // Unit test mode: call a Moq mock of the service contract
    var portalServiceMock = new Mock<IPortalService>();
    var portalService = portalServiceMock.Object;
#else
    // Integration test mode: call the real WCF service
    var portalService = new ManageCustomerPortalClient();
#endif

    "Given an already populated widget gallery".Context(() =>
    {
#if MOCK
        portalServiceMock.Setup(p => p.GetAllWidgetDefinitions())
            .Returns(new Widget[] { new Widget { ID = 1 }, new Widget { ID = 2 } })
            .Verifiable();
#endif
    });

    Widget[] widgets = default(Widget[]);

    "When all widget definitions are requested".Do(() =>
    {
        widgets = portalService.GetAllWidgetDefinitions();
    });

    "It should return the widgets in the widget gallery".Assert(() =>
    {
#if MOCK
        portalServiceMock.VerifyAll();
#endif
        // The same expectations apply in both modes
        Assert.NotEqual(0, widgets.Length);
        Assert.NotEqual(0, widgets[0].ID);
    });
}
```

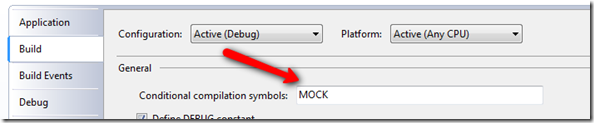

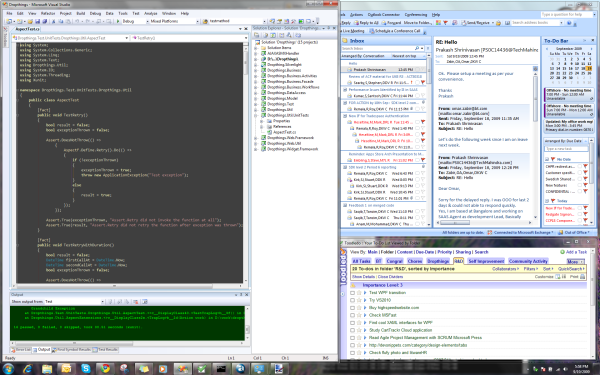

The code is now in unit test mode, because MOCK is defined as a conditional compilation symbol. When I run it, it performs the unit test using Moq. When I want to switch to integration test mode, all I do is remove the word “MOCK” from Project Properties -> Build -> Conditional compilation symbols.
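The Conditional compilation symbols box in Project Properties is saved in the project file as the MSBuild `DefineConstants` property. If you would rather switch modes from the command line (for example, on a build server) than edit project properties, the following is one possible sketch. It assumes a hypothetical custom property named `IntegrationTest`: unless that property is set to `true`, `MOCK` is appended to whatever symbols the project already defines.

```xml
<!-- Hypothetical sketch: place this after the project's existing configuration
     PropertyGroup elements so $(DefineConstants) already holds DEBUG;TRACE. -->
<PropertyGroup Condition="'$(IntegrationTest)' != 'true'">
  <!-- Unit test mode by default: add MOCK to the existing symbols -->
  <DefineConstants>$(DefineConstants);MOCK</DefineConstants>
</PropertyGroup>
```

With this in place, a normal build compiles the mocked unit tests, while something like `msbuild YourTests.csproj /p:IntegrationTest=true` (project file name hypothetical) compiles the same specifications without `MOCK`, so they call the real service. This replaces typing MOCK into the Conditional compilation symbols box; if MOCK is still listed there, it stays defined either way.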

I hope this gives you some ideas for saving time when writing unit and integration tests.