If you are storing ASP.NET session in webserver memory, then when a webserver dies, you lose all the sessions on that server. This results in a poor customer experience, as users are logged out in the middle of a journey and have to log back in, losing their state and sometimes their data. A common solution is to store session outside the webserver. You can use a separate cluster of servers running software that lets your application store session. Some popular technologies for storing session out-of-process are SQL Server, Memcached, Redis, and NoSQL databases like Couchbase. Here I present some performance test results for an ASP.NET MVC 3 website using SQL Server vs Redis vs Couchbase.

Tag: asp.net MVC

Deploy ASP.NET MVC on IIS 6, solve 404, compression and performance problems

There are several problems with an ASP.NET MVC application when deployed on IIS 6.0:

- Extensionless URLs give 404 unless some URL Rewrite module is used or wildcard mapping is enabled.
- IIS 6.0 built-in compression does not work for dynamic requests. As a result, ASP.NET pages are served uncompressed, resulting in poor site load speed.
- Mapping wildcard extension to ASP.NET introduces the following problems:
  - Slow performance, as all static files get handled by ASP.NET and ASP.NET reads the file from the file system on every call.
  - Expires headers don’t work for static content, as IIS does not serve them anymore. Learn about benefits of expires header from here. ASP.NET serves a fixed expires header that makes content expire in a day.
  - Cache-Control header does not produce max-age properly and thus caching does not work as expected. Learn about caching best practices from here.
- After deploying on a domain as the root site, the homepage produces HTTP 404.

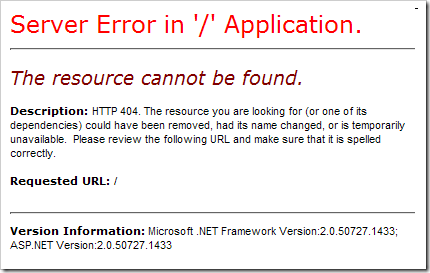

Problem 1: Visiting your website’s homepage gives 404 when hosted on a domain

You have done the wildcard mapping, mapped the .mvc extension to the ASP.NET ISAPI handler, and written the route mapping for Default.aspx or default.aspx (lowercase), but when you visit your homepage after deployment, you still get an HTTP 404.

You will find people banging their heads on the wall here:

- http://forums.asp.net/t/1237051.aspx

- http://forums.asp.net/t/1253599.aspx

- http://forums.asp.net/p/1239943/2294813.aspx

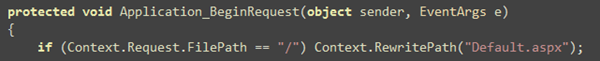

The solution is to capture hits going to “/” and rewrite them to Default.aspx.

You can apply this approach to any URL that ASP.NET MVC should handle but does not. Just look at the URL reported on the 404 error page and rewrite it to a proper URL.
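As a rough illustration of the rewrite, here is a minimal sketch that hooks Application_BeginRequest in Global.asax. The class name MvcApplication and the helper IsRootRequest are assumptions made for this example, and the downloadable code may do this differently.

```csharp
using System;
using System.Web;

// Global.asax.cs (sketch). MvcApplication is a hypothetical class name.
public class MvcApplication : HttpApplication
{
    protected void Application_BeginRequest(object sender, EventArgs e)
    {
        // "~/" is the app-relative path reported for a hit on the site root.
        if (IsRootRequest(Request.AppRelativeCurrentExecutionFilePath))
        {
            // Hand the request over to Default.aspx, which the routes already cover.
            Context.RewritePath("~/Default.aspx");
        }
    }

    // Hypothetical helper, kept separate so the check can be tested without IIS.
    public static bool IsRootRequest(string appRelativePath)
    {
        return string.Equals(appRelativePath, "~/", StringComparison.Ordinal);
    }
}
```

The same pattern works for any other URL that ends in 404: compare the incoming path and rewrite it to a URL that your routes handle.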

Problem 2: IIS 6 compression is no longer working after wildcard mapping

When you enable wildcard mapping, IIS 6 compression no longer works for extensionless URLs, because IIS 6 does not see any extension that is defined in the IIS Metabase. You can learn about the IIS 6 compression feature and how to configure it properly from my earlier post.

The solution is to use an HttpModule to do the compression for dynamic requests.

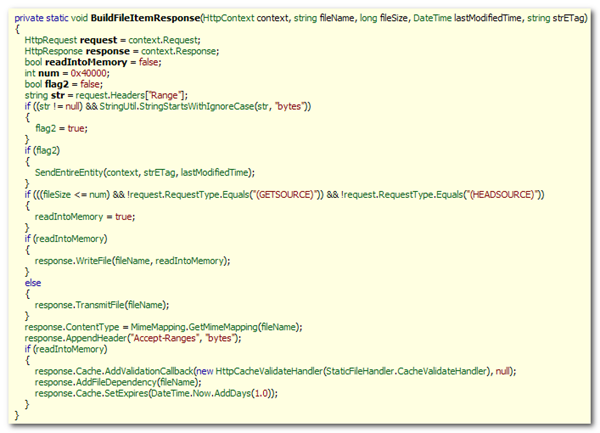

Problem 3: ASP.NET ISAPI does not cache Static Files

When ASP.NET’s DefaultHttpHandler serves static files, it does not cache the files in memory or in the ASP.NET cache. As a result, every hit to a static file results in a file read. Below is the decompiled code in DefaultHttpHandler when it handles a static file. As you see here, it makes a file read on every hit and it only sets the expiration to one day in the future. Moreover, it generates an ETag for each file based on the file’s modified date.

For best caching efficiency, we need to get rid of that ETag, produce an expiry date in the far future (like 30 days), and produce a Cache-Control header, which offers better control over caching.

So, we need to write a custom static file handler that will cache small files like images, Javascripts, CSS, HTML and so on in the ASP.NET cache and serve the files directly from cache instead of hitting the disk. Here are the steps:

- Install an HttpModule that installs a Compression Stream on Response.Filter so that anything written on Response gets compressed. This serves dynamic requests.
- Replace ASP.NET’s DefaultHttpHandler that listens on *.* for static files.
- Write our own Http Handler that will deliver compressed response for static resources like Javascript, CSS, and HTML.

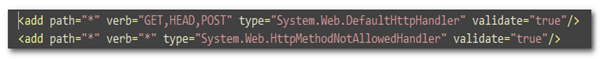

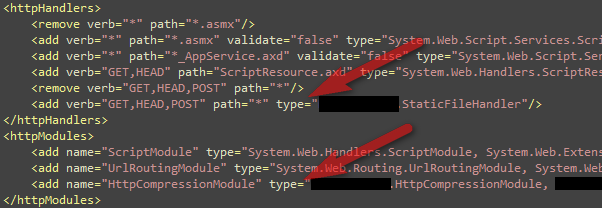

Here’s the mapping in ASP.NET’s web.config for the DefaultHttpHandler. You will have to replace this with your own handler in order to serve static files compressed and cached.

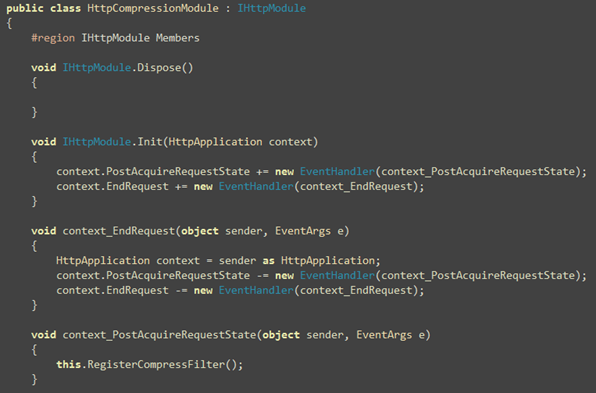

Solution 1: An Http Module to compress dynamic requests

First, you need to compress the responses that are served by the MvcHandler or ASP.NET’s default Page Handler. The following HttpCompressionModule hooks on the Response.Filter and installs a GZipStream or DeflateStream on it so that whatever is written on the Response stream gets compressed.

These are formalities for a regular HttpModule. The real hook is installed as below:

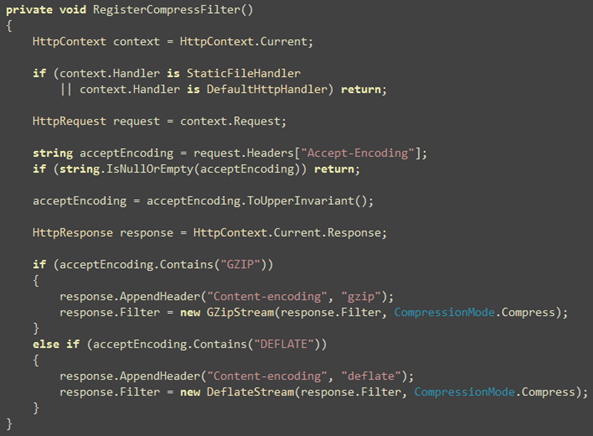

Here you see we ignore requests that are handled by ASP.NET’s DefaultHttpHandler and by our own StaticFileHandler, which you will see in the next section. After that, it checks whether the request allows content to be compressed. The Accept-Encoding header contains “gzip” or “deflate” or both when the browser supports compressed content. So, when the browser supports compressed content, a Response Filter is installed to compress the output.
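To make the flow concrete, here is a minimal sketch of such a module, not the downloadable one. It assumes the hook is placed on PreRequestHandlerExecute, it only skips DefaultHttpHandler (the custom StaticFileHandler would need the same check), and the helper ChooseEncoding is a name invented for this example that ignores q-values in the header.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Web;

public class HttpCompressionModule : IHttpModule
{
    public void Init(HttpApplication application)
    {
        // By this event the handler for the request has already been chosen.
        application.PreRequestHandlerExecute += OnPreRequestHandlerExecute;
    }

    public void Dispose() { }

    private static void OnPreRequestHandlerExecute(object sender, EventArgs e)
    {
        HttpContext context = ((HttpApplication)sender).Context;

        // Static files are not compressed here.
        if (context.Handler is DefaultHttpHandler) return;

        string encoding = ChooseEncoding(context.Request.Headers["Accept-Encoding"]);
        if (encoding == null) return; // browser did not ask for compression

        HttpResponse response = context.Response;
        // Wrap the existing filter so everything written to the response is compressed.
        response.Filter = encoding == "gzip"
            ? (Stream)new GZipStream(response.Filter, CompressionMode.Compress)
            : new DeflateStream(response.Filter, CompressionMode.Compress);
        response.AppendHeader("Content-Encoding", encoding);
    }

    // Hypothetical helper: prefers gzip, then deflate, else no compression.
    // Simplification: q-values such as "gzip;q=0" are not parsed.
    public static string ChooseEncoding(string acceptEncoding)
    {
        if (string.IsNullOrEmpty(acceptEncoding)) return null;
        string value = acceptEncoding.ToLowerInvariant();
        if (value.Contains("gzip")) return "gzip";
        if (value.Contains("deflate")) return "deflate";
        return null;
    }
}
```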

Solution 2: An Http Handler to compress and cache static file requests

Here’s how the handler works:

- Hooks on *.* so that all unhandled requests get served by the handler.
- Handles some specific files like js, css, html and graphics files. Anything else, it lets ASP.NET transmit.
- For the extensions it handles itself, it caches the file content so that subsequent requests are served from cache.
- It allows compression of some specific extensions like js, css, html. It does not compress graphics files or any other extension.

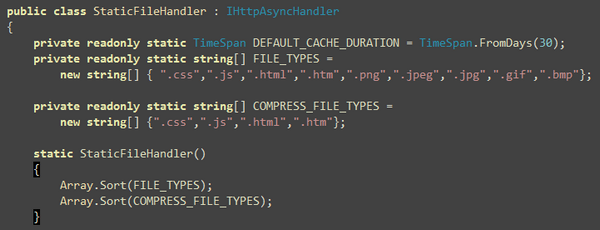

Let’s start with the handler code:

Here you will find the extensions the handler handles and the extensions it compresses. You should only put text file types in COMPRESS_FILE_TYPES.

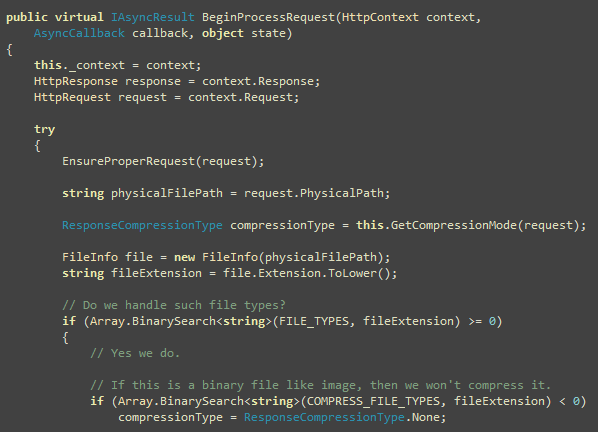

Now start handling each request from BeginProcessRequest.

Here you decide the compression mode based on the Accept-Encoding header. If the browser does not support compression, do not perform any compression. Then check if the file being requested has one of the extensions that we support. If not, let ASP.NET handle it. You will see soon how.

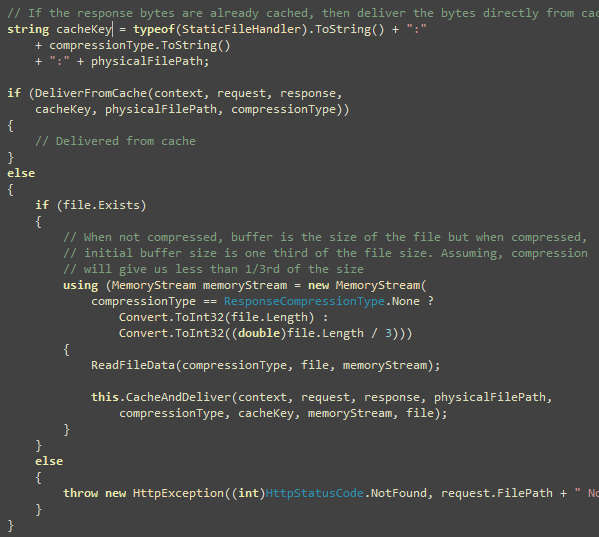

Calculate the cache key based on the compression mode and the physical path of the file. This ensures that no matter what URL is requested, there is one cache entry per physical file, since the physical path is always the same for the same file. The compression mode is part of the cache key because a different copy of the file’s content must be stored in the ASP.NET cache for each compression mode. So, there will be one uncompressed version, one gzip-compressed version and one deflate-compressed version.
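A small sketch of how such a key could be built is shown below. The class name StaticFileCacheKey, the "StaticFile:" prefix and the string values for the compression mode are assumptions for illustration only.

```csharp
using System;

public static class StaticFileCacheKey
{
    // compressionMode is assumed to be "none", "gzip" or "deflate".
    // physicalPath is the file's path on disk, not the requested URL.
    public static string Build(string compressionMode, string physicalPath)
    {
        if (compressionMode == null) throw new ArgumentNullException("compressionMode");
        if (physicalPath == null) throw new ArgumentNullException("physicalPath");

        // One entry per (mode, file) pair, so each file has up to three cached copies.
        return "StaticFile:" + compressionMode + ":" + physicalPath;
    }
}
```

Two different URLs that map to the same file on disk produce the same key, while the same file requested with gzip and with deflate produces two separate keys.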

Next, check if the file exists. If not, throw HTTP 404. Then create a memory stream that will hold the bytes for the file or the compressed content. Then read the file and write it into the memory stream, either directly or via a GZip or Deflate stream. Then cache the bytes in the memory stream and deliver them to the response. You will see the ReadFileData and CacheAndDeliver functions soon.

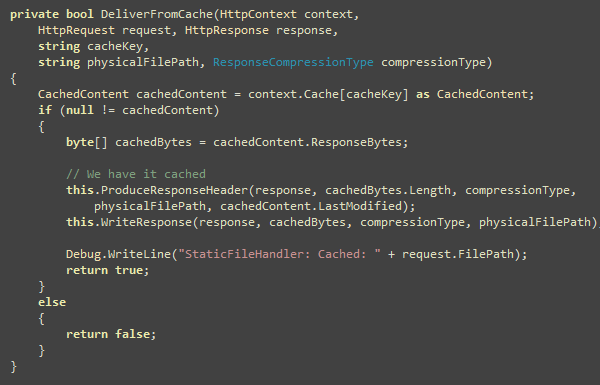

This function delivers content directly from the ASP.NET cache. The code is simple: read from cache and write to the response.

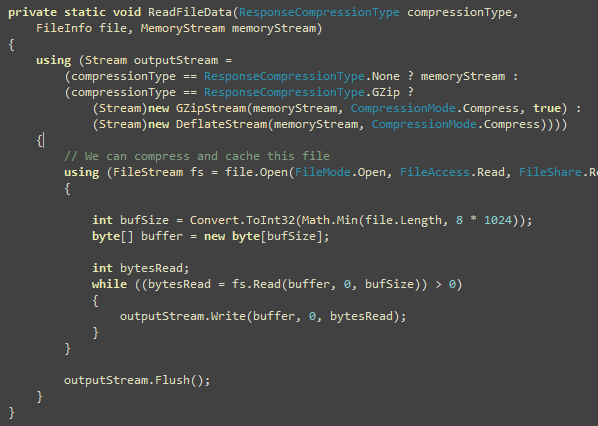

When the content is not available in cache, read the file bytes and store them in a memory stream, either as they are or compressed, based on the compression mode you decided before.

Here bytes are read in chunks in order to avoid a large memory allocation. You could read the whole file in one shot and store it in a byte array the same size as the file. But I wanted to save memory allocation. Do a performance test to figure out whether reading in 8K chunks is the best approach for you.
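The chunked read can be sketched as follows. This is an illustration under assumptions, not the downloadable code: the class name StaticFileReader is made up, and the compression mode is passed as "gzip", "deflate" or null for no compression.

```csharp
using System;
using System.IO;
using System.IO.Compression;

public static class StaticFileReader
{
    // Reads the file in 8K chunks into memory, optionally compressing on the way.
    public static byte[] ReadFileData(string physicalPath, string encoding)
    {
        var memory = new MemoryStream();
        Stream target = Wrap(memory, encoding);
        try
        {
            using (var file = new FileStream(physicalPath, FileMode.Open,
                                             FileAccess.Read, FileShare.Read))
            {
                var buffer = new byte[8 * 1024];
                int read;
                while ((read = file.Read(buffer, 0, buffer.Length)) > 0)
                {
                    target.Write(buffer, 0, read);
                }
            }
        }
        finally
        {
            // Disposing the compressor flushes its final block into the memory stream.
            if (!ReferenceEquals(target, memory)) target.Dispose();
        }
        return memory.ToArray();
    }

    private static Stream Wrap(MemoryStream memory, string encoding)
    {
        // leaveOpen = true keeps the memory stream usable after the compressor is disposed.
        if (encoding == "gzip") return new GZipStream(memory, CompressionMode.Compress, true);
        if (encoding == "deflate") return new DeflateStream(memory, CompressionMode.Compress, true);
        return memory;
    }
}
```

The compressor must be disposed before the bytes are taken from the memory stream, otherwise the compressed output can be truncated.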

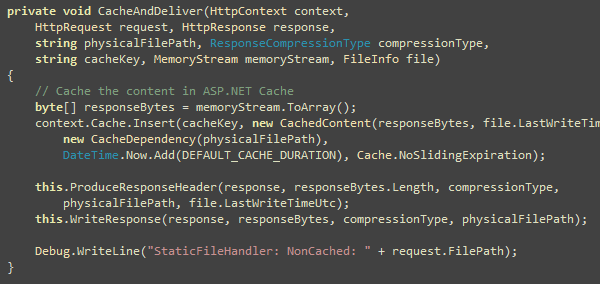

Now you have the bytes to write to the response. The next step is to cache them and then deliver them.

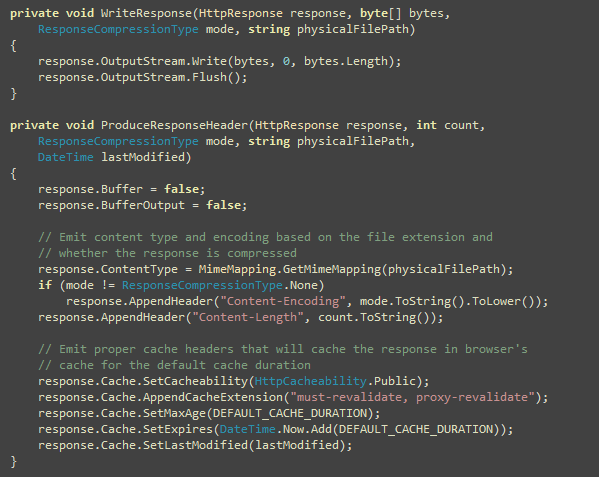

Now for the two functions that you have seen several times and have been wondering what they do. Here they are:

WriteResponse has no tricks, but ProduceResponseHeader has much wisdom in it. First, it turns off response buffering so that ASP.NET does not store the written bytes in any internal buffer. This saves some memory allocation. Then it produces proper cache headers to cache the file in the browser and proxies for 30 days, ensures proxies revalidate the file after the expiry date, and also produces the Last-Modified date from the file’s last write time in UTC.
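The header logic described above might look roughly like this. It is a sketch under assumptions: the class name StaticFileHeaders and the parameter list are invented for the example, and the real function in the download may set more or different headers.

```csharp
using System;
using System.Web;

public static class StaticFileHeaders
{
    // 30 days, as described in the text.
    public static readonly TimeSpan CacheDuration = TimeSpan.FromDays(30);

    public static void ProduceResponseHeader(HttpResponse response, int contentLength,
        string encoding, string contentType, DateTime lastWriteTimeUtc)
    {
        // Do not hold the bytes in ASP.NET's internal buffer.
        response.BufferOutput = false;

        response.ContentType = contentType;
        response.AppendHeader("Content-Length", contentLength.ToString());
        if (!string.IsNullOrEmpty(encoding))
        {
            response.AppendHeader("Content-Encoding", encoding); // "gzip" or "deflate"
        }

        HttpCachePolicy cache = response.Cache;
        cache.SetCacheability(HttpCacheability.Public);                  // browsers and proxies may cache
        cache.AppendCacheExtension("must-revalidate, proxy-revalidate"); // revalidate after expiry
        cache.SetMaxAge(CacheDuration);
        cache.SetExpires(DateTime.Now.Add(CacheDuration));
        cache.SetLastModified(lastWriteTimeUtc);                         // e.g. File.GetLastWriteTimeUtc(path)
    }
}
```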

How to use it

Get the HttpCompressionModule and StaticFileHandler from:

http://code.msdn.microsoft.com/fastmvc

Then install them in web.config. First, install the StaticFileHandler by removing the existing mapping for path="*", and then install the HttpCompressionModule.
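As an illustration, the relevant web.config section could look like the fragment below. The namespace and assembly name MyApp.Web are hypothetical placeholders, so use the actual type names from the code you downloaded. The verb list on the remove element is assumed to match the DefaultHttpHandler mapping in your machine-level web.config, so check it there.

```xml
<configuration>
  <system.web>
    <httpHandlers>
      <!-- Remove ASP.NET's DefaultHttpHandler mapping for path="*".
           Assumption: the inherited mapping uses verb="GET,HEAD,POST". -->
      <remove verb="GET,HEAD,POST" path="*" />
      <!-- MyApp.Web.StaticFileHandler, MyApp.Web is a hypothetical type/assembly name. -->
      <add verb="GET,HEAD,POST" path="*"
           type="MyApp.Web.StaticFileHandler, MyApp.Web" />
    </httpHandlers>
    <httpModules>
      <!-- MyApp.Web.HttpCompressionModule, MyApp.Web is a hypothetical type/assembly name. -->
      <add name="HttpCompressionModule"
           type="MyApp.Web.HttpCompressionModule, MyApp.Web" />
    </httpModules>
  </system.web>
</configuration>
```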

That’s it! Enjoy a faster and more responsive ASP.NET MVC website deployed on IIS 6.0.
