Imagine this: you are a student at Oxford and you want to join a program at Cambridge. Cambridge wants to pull all the courses you have taken at Oxford, along with your results, and credit them automatically so that you don’t have to repeat them. Eventually, when Cambridge decides to award you a degree, it can automatically check that you have completed all the courses its program requires, whether at Cambridge, Oxford, Harvard, MIT or any other university in the world. Would it not be nice to have a common system where all your courses and results from any university in the world are securely stored, and where universities can exchange what you have done fully automatically? There would be no need to get transcripts printed, mailed, certified, etc. anymore. Everything would be done securely and entirely online.

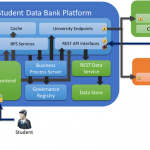

Let’s build such an imaginary system using ASP.NET MVC and Web API, and expose it through an ESB such as WSO2 ESB. The ESB is optional: you can run the ASP.NET app directly. The ESB is there to expose the services securely and reliably.

Let’s define what features we want on this system:

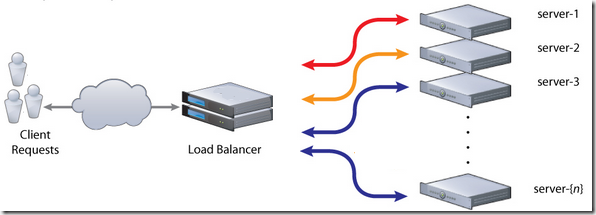

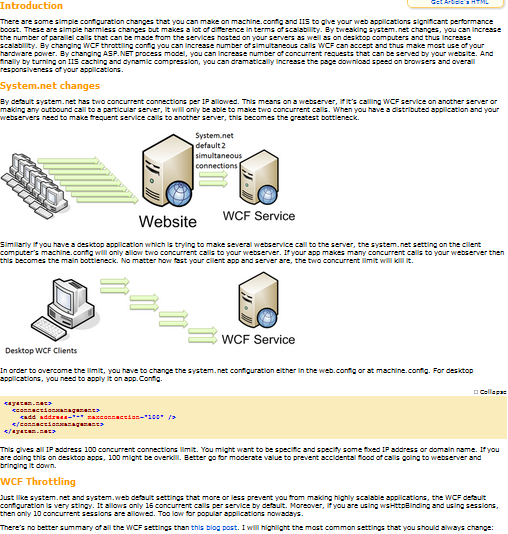

StudentDataBank.org (SDB, an imaginary organization) offers secure online storage and controlled exchange of student records between universities, colleges, schools and other types of Educational Institutes (EIs). An EI can establish a secure interface with SDB and upload its Courses, Programs, Students and Students’ Course Results. Another EI can then request that a Student’s Record be fetched from the offering EI. SDB takes care of securely validating the request and fetching the Student Records from the offering EI’s systems through the secure interface that EI has already established with SDB. SDB thus works as a broker between multiple EIs, enabling them to share student records with each other in a secure and auditable manner.
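The broker role described above can be illustrated with a small, language-neutral sketch. It is written in Python for brevity and is not the ASP.NET implementation from the article. All names here (`Institute`, `StudentDataBank`, `request_record`, `audit_log`) are hypothetical, and the "secure interface" is reduced to a simple registration check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CourseResult:
    student_id: str
    course_code: str
    grade: str


class Institute:
    """An EI that exposes its student results to the broker (hypothetical)."""

    def __init__(self, name, results):
        self.name = name
        self._results = list(results)

    def fetch_results(self, student_id):
        # Stand-in for a call over the EI's secure interface.
        return [r for r in self._results if r.student_id == student_id]


class StudentDataBank:
    """Broker that validates requests, fetches records and logs every attempt."""

    def __init__(self):
        self._institutes = {}
        self.audit_log = []

    def register(self, institute):
        self._institutes[institute.name] = institute

    def request_record(self, requester, provider, student_id):
        # Assumption: "validation" here only means both EIs are registered.
        if requester not in self._institutes or provider not in self._institutes:
            self.audit_log.append((requester, provider, student_id, "rejected"))
            raise PermissionError("both institutes must be registered with SDB")
        records = self._institutes[provider].fetch_results(student_id)
        self.audit_log.append((requester, provider, student_id, "served"))
        return records


sdb = StudentDataBank()
sdb.register(Institute("Oxford", [CourseResult("s1", "MATH101", "A")]))
sdb.register(Institute("Cambridge", []))
oxford_records = sdb.request_record("Cambridge", "Oxford", "s1")
```

The requesting EI never talks to the offering EI directly. Every request passes through the broker, which is what makes the exchange auditable.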

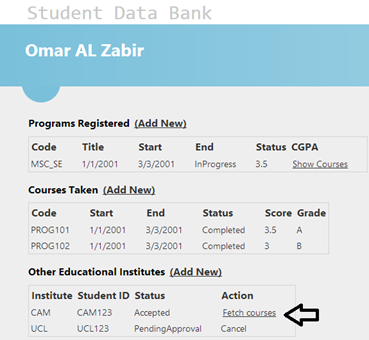

An awarding EI can initiate the process of awarding a degree to a student and, as part of that process, automatically establish whether the student has completed all the required courses, either at the awarding EI or at other EIs where the student claims to have taken them. Each EI can define which requirements its courses meet, so that courses from other EIs can be accepted automatically when a degree is awarded.
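The requirement check described above can be sketched as a set difference. This is a minimal illustration in Python, not the article's implementation. The requirement identifiers, the course codes and the `missing_requirements` helper are all made up for the example.

```python
def missing_requirements(required, completed_courses, requirement_map):
    """Return the sorted requirements that no completed course satisfies.

    required:          iterable of requirement ids the degree program needs
    completed_courses: iterable of (institute, course_code) pairs
    requirement_map:   dict mapping (institute, course_code) to the set of
                       requirement ids that the offering EI says it meets
    """
    satisfied = set()
    for course in completed_courses:
        # Courses without a declared mapping satisfy nothing.
        satisfied |= requirement_map.get(course, set())
    return sorted(set(required) - satisfied)


# Hypothetical data: each EI declares which requirements its courses meet.
requirement_map = {
    ("Oxford", "MATH101"): {"calculus"},
    ("Cambridge", "CS200"): {"algorithms", "data-structures"},
}
completed = [("Oxford", "MATH101"), ("Cambridge", "CS200")]
program = ["calculus", "algorithms", "statistics"]

outstanding = missing_requirements(program, completed, requirement_map)
can_award = not outstanding
```

With this data the student still lacks a course meeting the `statistics` requirement, so `can_award` is false until such a course is recorded at any participating EI.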

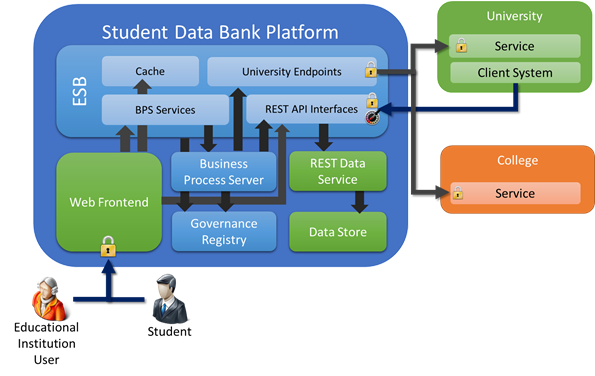

The Web site looks like this:

Clicking Fetch Course triggers a workflow that fetches the courses from the University through the integration interface established with it.

Read the full CodeProject article to see how this is done:

http://www.codeproject.com/Articles/895310/Student-Data-Bank-An-ASP-NET-MVC-WebAPI-EF-Codefir