When your database tables start accumulating thousands of rows

and many users start working on the same table concurrently, SELECT

queries on the tables start producing lock contentions and

transaction deadlocks. This is a common problem in any high volume

website. As soon as you start getting several concurrent users

hitting your website that results in SELECT queries on some large

table like aspnet_users table that are also being updated

very frequently, you end up having one of these errors:

Transaction (Process ID ##) was deadlocked on lock resources

with another process and has been chosen as the deadlock victim.

Rerun the transaction.

Or,

Timeout Expired. The Timeout Period Elapsed Prior To Completion

Of The Operation Or The Server Is Not Responding.

The solution to these problems are – use proper index on

the table and use transaction isolation level Read

Uncommitted or WITH (NOLOCK) in your SELECT queries. So,

if you had a query like this:

SELECT * FORM aspnet_users

where ApplicationID =’xxx’ AND LoweredUserName = 'someuser'

You should end up having any of the above errors under high

load. There are two ways to solve this:

SET TRANSACTION LEVEL READ UNCOMMITTED;

SELECT * FROM aspnet_Users

WHERE ApplicationID =’xxx’ AND LoweredUserName = 'someuser'

Or use the WITH (NOLOCK):

SELECT * FROM aspnet_Users WITH (NOLOCK)

WHERE ApplicationID =’xxx’ AND LoweredUserName = 'someuser'

The reason for the errors are that since aspnet_users is

a high read and high write table, during read, the table is

partially locked and during write, it is also locked. So, when the

locks overlap on each other from several queries and especially

when there’s a query that’s trying to read a large

number of rows and thus locking large number of rows, some of the

queries either timeout or produce deadlocks.

Linq to Sql does not produce queries with the WITH

(NOLOCK) option nor does it use READ UNCOMMITTED. So, if

you are using Linq to SQL queries, you are going to end up with any

of these problems on production pretty soon when your site becomes

highly popular.

For example, here’s a very simple query:

using (var db = new DropthingsDataContext()) { var user = db.aspnet_Users.First(); var pages = user.Pages.ToList(); }

DropthingsDataContext is a DataContext built from Dropthings database.

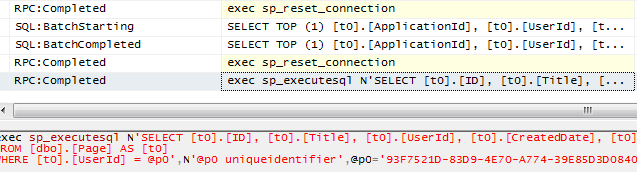

When you attach SQL Profiler, you get this:

You see none of the queries have READ UNCOMMITTED or WITH

(NOLOCK).

The fix is to do this:

using (var db = new DropthingsDataContext2()) { db.Connection.Open(); db.ExecuteCommand("SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;"); var user = db.aspnet_Users.First(); var pages = user.Pages.ToList(); }

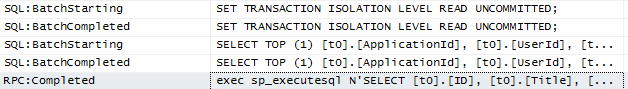

This will result in the following profiler output

As you see, both queries execute within the same connection and

the isolation level is set before the queries execute. So, both

queries enjoy the isolation level.

Now there’s a catch, the connection does not close. This

seems to be a bug in the DataContext that when it is disposed, it

does not dispose the connection it is holding onto.

In order to solve this, I have made a child class of the

DropthingsDataContext named DropthingsDataContext2

which overrides the Dispose method and closes the

connection.

class DropthingsDataContext2 : DropthingsDataContext, IDisposable { public new void Dispose() { if (base.Connection != null) if (base.Connection.State != System.Data.ConnectionState.Closed) { base.Connection.Close(); base.Connection.Dispose(); } base.Dispose(); } }

This solved the connection problem.

There you have it, no more transaction deadlock or lock

contention from Linq to SQL queries. But remember, this is only to

eliminate such problems when your database already has the right

indexes. If you do not have the proper index, then you will end up

having lock contention and query timeouts anyway.

There’s one more catch, READ UNCOMMITTED will return rows

from transactions that have not completed yet. So, you might be

reading rows from transactions that will rollback. Since

that’s generally an exceptional scenario, you are more or

less safe with uncommitted read, but not for financial applications

where transaction rollback is a common scenario. In such case, go

for committed read or repeatable read.

There’s another way you can achieve the same, which seems

to work, that is using .NET Transactions. Here’s the code

snippet:

using (var transaction = new TransactionScope( TransactionScopeOption.RequiresNew, new TransactionOptions() { IsolationLevel = IsolationLevel.ReadUncommitted, Timeout = TimeSpan.FromSeconds(30) })) { using (var db = new DropthingsDataContext()) { var user = db.aspnet_Users.First(); var pages = user.Pages.ToList(); transaction.Complete(); } }

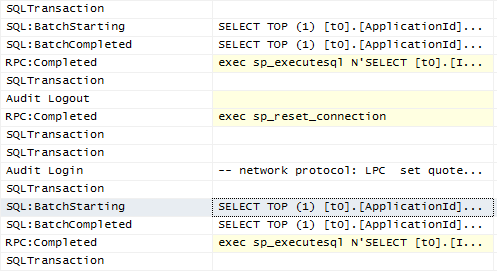

Profiler shows a transaction begins and ends:

The downside is it wraps your calls in a transaction. So, you

are unnecessarily creating transactions even for SELECT operations.

When you do this hundred times per second on a web application,

it’s a significant over head.

Some really good examples of deadlocks are given in this

article:

http://www.code-magazine.com/article.aspx?quickid=0309101&page=2

I highly recommend it.

>>>Linq to Sql does not produce queries with the WITH (NOLOCK) option nor does it use READ UNCOMMITTED

This is a bad example of playing with isolation level, there are already correct objects in .NET Framework to achive this:

So instead of:

db.ExecuteCommand(“SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;”);

Use:

using (new TransactionScope(TransactionScopeOption.Required, new TransactionOptions { IsolationLevel = IsolationLevel.ReadUncommitted }))

{

//Your db Operation

}

Check: http://www.hanselman.com/…/GettingLINQToSQLAndLINQToEntitiesToUseNOLOCK.aspx

Pls search the internet prior submitting this kind of post.

Next, not sure about the Dispose Bug of DataContext, as reflector shows that it is calling the internal provider Dispose method which in turn calls the ConnectionManger DisposeConnection Method.

System.Transactions has significant overhead. I have never been able to use it on a high volume website without making CPU go 100% and Req/sec come down to 1/10th. It's made for enterprise applications, not for high volume websites.

Also I saw the post from ScottH, and I tried it, and it made my web site go down. As I said, System.Transactions is for Enterprise Apps, not for high volume web apps.

Dont agree, as per MSDN:

“In addition to the programmability enhancements, System.Transactions and ADO.NET can work together to coordinate optimizations when working with transactions. A promotable transaction is a lightweight (local) transaction that can be automatically promoted to a fully distributed transaction on an as-needed basis.

In ADO.NET 2.0, System.Data.SqlClient adds support for promotable transactions when working with SQL Server 2005. A promotable transaction does not invoke the added overhead of a distributed transaction unless the added overhead is required. Promotable transactions are automatic require no intervention from the developer.”

Maybe you have other issues in your design/architecture. Try consult with SO guys they are running on L2S and Does not have this issue.

In either case you should not claim that L2S does not has the support. “Linq to Sql does not produce queries with the WITH (NOLOCK) option nor does it use READ UNCOMMITTED”

Correct your post or mentioned whatever your findings with TransactionScope otherwise it conveys wrong message to the community.

Dude, look at the profiler trace. System Transaction is unnecessarily opening a transaction where I am just doing a SELECT! Can't you see the difference between the different profiler traces?

What you are showing me here is Distributed Transaction. Even local System.Transaction brings a high volume site down, let alone distributed transaction. You never, I repeat, never use DTC on a web app. It's not made for web apps. I know what I am taking about because I have tested this in a real project. Don't just read text books. Build a sample web site, do a load test and see yourself.

Linq to Sql does not produce queries with the WITH (NOLOCK) option nor does it use READ UNCOMMITTED. It's the System.Transaction that opens a transaction in READ UNCOMMITTED mode. L2S has no credit here. Dude, read the MSDN!

I have a production database with a table of 100,000 rows. Simple update query like

“Update Table X set x.Field=2

where Id=2 “

started failing. Looked into the Index it was highly fragmented. i optimized it but it became fragmented quickly. Then i just drop that table keeping a backup and created a new table with the same structure and dumped the data it ran fine. i wonder why would that happen any ideas?

Oh my table was a dynamically created by my application.

Can you send the CREATE TABLE script, the indexes and some sample queries?

Looks like you need some weekly REINDEX job on the table which basically drops clustered index and recreates it.

No I dont see anything in Profiler opening a transaction:

set quoted_identifier on

set arithabort off

set numeric_roundabort off

set ansi_warnings on

set ansi_padding on

set ansi_nulls on

set concat_null_yields_null on

set cursor_close_on_commit off

set implicit_transactions off

set language us_english

set dateformat mdy

set datefirst 7

set transaction isolation level read uncommitted

Again it is not a DTC it is just setting the transaction isolation level, no overhead at all as what you are doing it does progrmatically with no string command and hacks.

I have enough of this nonsense.

Regarding your philoshopy with L2S with System.Transaction reminds me

“I dont eat my mouth eats”

Dear Omar,

This is a very practical post. After reading the comments above I did some research and found that others use and recommend “Snapshot Isolation” if you use SqlServer 2005 or later.

For example, Jeff encounter the same problem on his StackOverflow.com, so he posted this same question on StackOverflow at stackoverflow.com/…/diagnosing-deadlocks-in-sql-server-2005

And, it seems like after feedback, he decides to use the following

ALTER Database [StackOverflow.Beta] SET READ_COMMITTED_SNAPSHOT ON

Then I find this MSDN article on the theory and implementation on Snapshot Isolation at msdn.microsoft.com/…/tcbchxcb(VS.80).aspx

I'd like to ask if you already knew and tried this Snapshot approach and if yes, did you also find it de-grade the performance of your site?

If you haven't tried this method, do you mind try it out and let us know if it works for you?

Thanks,

Ray.

Thank you for submitting this cool story – Trackback from PimpThisBlog.com

I was trying to delete records from database and sometimes on production, the SubmitChanges() goes through and updates the associated entities yet the data does not gets deleted in the database. This is happening only in production and I could not replicate the same on stage or on my local machine. Now the table in which I am performing the delete transaction does have primary keys.

My code reads like

Any suggestions ??

[Transactional(TransactionalTypes.TransactionScope)]

private void DataPortal_Delete(EntityScenarioCriteria criteria)

{

try

{

using (var ctx = ContextManager.GetManager(da.Database.MediaGlobalBilling))

{

var scenario = (from sc in ctx.DataContext.Scenarios where sc.ScenarioID == criteria.ScenarioId select sc).First();

LoadProperty(ScenarioIdProperty, criteria.ScenarioId);

LoadProperty(ScenarioNameProperty, scenario.ScenarioName);

LoadProperty(YearIdProperty, criteria.YearId);

LoadProperty(UserIdProperty, GMBIdentity.CurrentIdentity.UserId);

EntityId = criteria.EntityId;

YearId = criteria.YearId;

MonthId = criteria.MonthId;

scenariodata.VendorScenarioMonth[] vendorScenarioMonths;

vendorScenarioMonths = (from vsm in ctx.DataContext.VendorScenarioMonths

where vsm.EntityID == criteria.EntityId

&& vsm.YearID == criteria.YearId

&& vsm.ScenarioID == criteria.ScenarioId

select vsm).ToArray();

foreach (var entry in vendorScenarioMonths)

{

ctx.DataContext.DeleteEntityScenario(EntityId, ScenarioId, YearId, entry.MonthId);

ctx.DataContext.SubmitChanges();

}

}

}

catch (Exception ex)

{

Guid correlationId = EventLog.Write(ex.ToString(), System.Diagnostics.EventLogEntryType.Error);

throw ex;

}

}

I get a timeout error for the first 2 times when i run the website. Please help me to solve this problem.

NOTE: I have tried these methods also

1) web.config timeout increase Connect Timeout=30000

2) NOLOCK

3) Connection Pool

4) commandTimeout=”9600″

5) Authentication timeout=”2880″

6) and Finally i tried TransactionScope also

one Important Thing i use synonms to get data/table to access tahat table so Please let me know solutions

i use synonyms to get the values from another database. its not a session time out issue. please read my problem give solutions

some of the images on this page are not shown – bad html

It could be many things, but your index FILLFACTOR option should reduce fragmentation from frequent inserts. When you create your index on your table, use the FILLFACTOR option, set it to say 90 (90%) and see if it makes a difference, if it does a little bit try lowering the number, say 85 until you find a sweet spot. I would recommend a regular reindexing strategy based on your needs as well if you’re not already doing that.

Also, if you’re using uniqueidentifier columns as keys it can cause fragmentation issues on indexes, try int/bigint instead

Ray, I think Read Committed Snapshot isolation (RCSI) is a good solution for this. Well recommended.

Bear in mind though that it can have an impact on resources, and can affect the locking mechanics on your entire database (it’s a database option). With this set on, each transaction gets it’s own readable snapshot of the data and is therefore unaffected by external locking

From a DBA angle, even though the nolock hint or read uncommitted seems like a turbo button to resolve issues like this, it does go against the whole point of having locking on the objects and that is to prevent dirty reads and enforce ACID isolation.

I’m considering moving the aspnet tables to it’s own database so that we can apply RCSI to the database and it only impacts on those objects then.